Conversion Hotel is not your everyday conference. It lasts for 3 days, on an island, and it has leading speakers from all over the world. No wonder it’s always sold out in no time.

So for those of you who couldn’t get a ticket for 2017, here are the key insights.

- Make sure you get the basics right. Too many companies invest millions of dollars in ‘sexy stuff’ like personalization and machine learning when they don’t even have their analytics configured right. Money down the drain.

- User testing is not useless testing, if you do it right. Recruit the right users, write a good scenario and be a kick-ass moderator.

- We make decisions based on emotions. There’s a science behind storytelling you can use to your advantage.

- Befriend your analysts, they’re nerds but also cool people.

- Results are gained by small, continuous improvements, inch by inch.

- Be aware of the ethics of your job. You’re influencing people. Are you sure you should be?

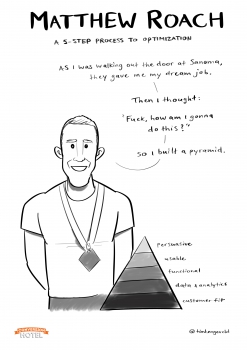

1. Matt Roach (Sanoma Publishers)

If you’re a conversion optimization expert, you know that it’s hard work. You have to know about a LOT of disciplines, all at the same time.

Especially if you’re just getting started in CRO, you’ve probably asked yourself these questions: how can I become an expert in so many fields? How do I know what to do first? And how am I supposed to remember everything?

Matt Roach gave us the answer at Conversion Hotel. His ‘Optimization Pyramid’ makes sure you don’t miss a step and that you get the best results.

These are the levels of the pyramid, from the base to the top:

- Product Customer Fit: what does your customer need?

- Data & Analytics: what can and should you measure?

- Functional: does your website work for everyone?

- Usable & Intuitive: is it easy to use?

- Persuasive: does it convince your users?

Not sure you need this system? There are at least 5 reasons you do:

1. It’s a checklist

Follow this pyramid from the base to the top, and you know you’ve covered everything.

2. The order matters

Don’t jump straight to the top of the pyramid, like so many companies do. Persuasion and personalization are just the sexy stuff. The big gains are made at the bottom of the pyramid. So first get the basics right: what does my customer need, what are my KPIs and how do I measure them?

3. It helps you prioritize

A/B testing only comes in at the top of the pyramid. There’s a lot more you can do before that. A/B testing is not the same as conversion optimization. It’s only one aspect of it.

4. It helps you to convince management

It’s a great way to calculate budget and outcome, to show management you mean business.

5. It works for any project you want to get started with

This framework works for anything you want to release. Just try it out.


2. Els Aerts (AGConsult)

Follow Els Aerts on Twitter: @els_aerts

First of all, there are a lot of misconceptions about user testing that Els wants to get rid of:

- You should NOT ask “How does that make you feel?”

- A focus group is NOT a user test.

- User testing is NOT useless testing – if you do it right.

So how do you do it right? 3 tips:

1. Recruit the right users

Don’t ask your partner, your mum or your best friend to test your website. They love you too much to be critical, and they’ll try harder to complete a task. Test with strangers instead.

Don’t focus on the exact demographics. Get people in who belong to your target audience: clients or potential clients. People who are interested in the stuff you’re selling. Do focus on diversity in age, ethnicity and gender.

And keep in mind somebody’s device preference. Are you asking an Apple fan to test on an Android phone? Then you’ll never get reliable results.

2. Write a good scenario

The best starting point is data. Do you see huge drop-offs on your website that you can’t find an explanation for? That’s a great place to start with a user test.

Ask open questions. Don’t make questions too specific. Never ask something like: “Buy a red party dress in size M.” You don’t know whether the test participant likes to wear dresses, the color red or what size they are. Leave a task open enough for a user to make it their task.

Tailor your questions. Make small talk with your test participants before you start the test. Find out a bit about them. That way, you can personalize the tasks you set: “You said you like going away on short trips with your best friend. Imagine you’re about to plan a trip right now, where would you go?”

Mix up your questions. If you always ask the same questions in the same order, you won’t get reliable results: by the time you get to the last question, users will have gotten used to your website and will complete that task faster.
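One way to mix up the order without losing track of who got what is to shuffle the tasks per participant with a fixed seed. This is only a minimal sketch: the task names, the `task_order` helper and the seed string are made up for illustration and were not part of the talk.

```python
import random

def task_order(tasks, participant_id, seed="usertest"):
    """Return the tasks in a shuffled order that stays the same for one participant."""
    # A string seed makes the shuffle reproducible, so you can look up the order later.
    rng = random.Random(f"{seed}-{participant_id}")
    order = list(tasks)  # copy, so the original list is left untouched
    rng.shuffle(order)
    return order

# Hypothetical tasks for a travel website
tasks = ["Plan a weekend trip", "Compare two hotels", "Change a booking"]

for participant in range(1, 4):
    print(participant, task_order(tasks, participant))
```

Because the order depends only on the seed and the participant number, you can reconstruct afterwards which task each person did first, and check whether the last task really does go faster.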

3. Be a kick-ass moderator

A moderator plays 4 roles at once:

- Flight attendant: help the user feel at ease

- Sportscaster: make sure everyone in the observation room can follow

- Scientist: observe what the user does and doesn’t do

- Switzerland: be neutral

99% of the talking during a user test should be done by the user. Or in other words: shut the **** up.

3. David JP Phillips

Follow David JP Phillips on Twitter: @davidjpphillips

The core message: we make decisions based on emotions.

So if you can tell a story that makes people emotionally attached to your product or service, they will buy.

How, exactly?

His (shorter) TED talk gives you an idea:

Do you remember the last time you fell in love? … No one? Ouch.

4. Bart Schutz (Online Dialogue)

Follow Bart on Twitter: @barts

3 key quotes worth remembering:

1. “I hate personas.”

Don’t make up personas and then look for them in the numbers. What you can do is dig into the data, analyze different types of behavior and create behavioral personas that way.

2. “Don’t just treat desktop and mobile users the same way”

For a hostel booking website, Online Dialogue found out there were huge differences in behavior for desktop and mobile users:

- On mobile, people were goal-oriented: I want to book a hostel now

- On desktop, people were shopping around: where should we go and what are the options?

For example: in one test, they left out ‘extra’ information such as testimonials and pictures. A winner on mobile, but not on desktop.

3. “Be aware of the ethics of online experimenting”

An example: for that same hostel booking site, they added elements to suggest a place was safe. This turned out to convince more women to book.

The problem: they had no details about the actual safety of the place. They only knew how many ‘incidents’ there had been, for example “Only 2 incidents in the past year.”

But they didn’t know whether an incident was a matter of noise disturbance … or, worst-case scenario, rape.

Would you still recommend this hostel to women if you knew it could be the latter?

5. Michele Kiss (Analytics Demystified)

Follow Michele Kiss on Twitter: @MicheleJKiss

Analytics and conversion optimization seem like different things, but they have a lot in common. Most importantly: they are both used to test hypotheses.

And there are at least 4 ways analytics can help you in conversion rate optimization:

1. Find your success metrics

You can only test what you can measure. So dig into those numbers and find out what metrics determine a successful test.

2. Discover test ideas

Analytics can tell you where the bottlenecks are on your website: where are people leaving in your flow? What form fields are people dropping off at?
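To make the bottleneck idea concrete, here is a small sketch that computes the drop-off between consecutive funnel steps. The step names and visitor counts are invented, and `drop_off_report` is a hypothetical helper, not something from Michele’s talk or from any analytics tool.

```python
def drop_off_report(funnel):
    """funnel: ordered list of (step_name, visitors). Returns drop-off rate per transition."""
    report = []
    for (step, visitors), (next_step, next_visitors) in zip(funnel, funnel[1:]):
        # Share of visitors at this step who never reach the next one
        rate = 1 - next_visitors / visitors if visitors else 0.0
        report.append((f"{step} -> {next_step}", round(rate, 3)))
    return report

# Made-up numbers for a checkout flow
funnel = [
    ("Product page", 10000),
    ("Cart", 3000),
    ("Checkout", 1500),
    ("Payment", 1200),
    ("Confirmation", 1080),
]

report = drop_off_report(funnel)
for transition, rate in report:
    print(f"{transition}: {rate:.0%} drop-off")

# The transition with the highest drop-off is a candidate for your next test
worst = max(report, key=lambda row: row[1])
print("Biggest leak:", worst[0])
```

The biggest leak is not automatically the best place to test (early steps always lose more people), but it tells you where to start asking why.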

3. Prioritize test ideas

How much work is it and how much money is it going to make you? Calculate the impact to decide which tests to run first and to convince management. And don’t forget to track the actual results to see whether you were right and to forecast the impact more accurately next time!

(Read more on how this works exactly on Michele’s blog)
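As a rough illustration of “how much work versus how much money”, the sketch below ranks test ideas by expected monthly gain per day of effort. This is not Michele’s own method. The `Idea` class, the scoring formula and every number in it are assumptions for the sake of the example.

```python
from dataclasses import dataclass

@dataclass
class Idea:
    name: str
    monthly_visitors: int        # visitors who would see the change
    baseline_rate: float         # current conversion rate on that page
    expected_lift: float         # guessed relative lift, e.g. 0.05 for +5%
    value_per_conversion: float  # revenue per conversion
    effort_days: float           # estimated work to build and run the test

    def expected_monthly_gain(self) -> float:
        extra_conversions = self.monthly_visitors * self.baseline_rate * self.expected_lift
        return extra_conversions * self.value_per_conversion

    def score(self) -> float:
        # Expected gain per day of effort: higher means "do this first"
        return self.expected_monthly_gain() / self.effort_days

# Hypothetical backlog
ideas = [
    Idea("Shorter checkout form", 20000, 0.30, 0.05, 40.0, 5),
    Idea("New homepage hero", 100000, 0.02, 0.03, 40.0, 10),
    Idea("Fix broken coupon field", 5000, 0.50, 0.10, 40.0, 1),
]

ranked = sorted(ideas, key=lambda idea: idea.score(), reverse=True)
for idea in ranked:
    print(f"{idea.name}: about {idea.expected_monthly_gain():.0f} per month, score {idea.score():.0f}")
```

Once a test has run, put the measured lift next to `expected_lift`. The gap between the two tells you how much to trust your next forecast.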

4. Better control

One of Michele’s clients redesigned their site, but the new version performed 3% worse. Analytics can help you find out why.

In this case, bandwidth was one of the main drivers. The newer version had animation and the site wasn’t performing well for anyone with a slower connection.

Caution: digging into Google Analytics does not give you permission to judge a test by “anything positive you can find”. Don’t just give a spin to a test to prove it was a success. Determine your success metrics and stick to them.

Befriend your analysts. We’re nerds, but we’re also cool people.

6. Ronny Kohavi (Microsoft)

Follow Ronny Kohavi on Twitter: @RonnyK

Ronny Kohavi is general manager of Analysis and Experimentation at Microsoft.

- Every experiment they run is exposed to millions of users, sometimes tens of millions.

- They run about 300 of them … per week (and every multivariate test is counted as just 1 test).

- That’s roughly 15,000 A/B tests a year.

Few people on the planet have as much experience in A/B testing as Ronny does.

Here are 5 lessons he’s learned:

1. Agree on a good ‘overall evaluation criterion’ (OEC)

A good overall evaluation criterion is built from metrics you can measure in the short term, but it predicts long-term value.

An example: sending out more emails might give you more conversions right now, but it might also push more people to unsubscribe. So you lose in the long run, because you won’t be able to email those people anymore in the following years.

Make sure you know exactly what success means. Say you want to optimize your support page for ‘time on site’. Does that mean you want them to spend more time on your site, or less?
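The email example can be turned into a toy calculation. The sketch below is one possible way to fold a long-term cost into a single number. It is not Microsoft’s formula, and the `email_oec` function and all figures are hypothetical.

```python
def email_oec(conversions, value_per_conversion, unsubscribes, future_value_per_subscriber):
    """Short-term revenue minus the future value lost through unsubscribes."""
    short_term = conversions * value_per_conversion
    long_term_loss = unsubscribes * future_value_per_subscriber
    return short_term - long_term_loss

# Control: the current email frequency (made-up numbers)
control = email_oec(conversions=100, value_per_conversion=30.0,
                    unsubscribes=20, future_value_per_subscriber=25.0)

# Variant: more emails, more conversions now, but also more unsubscribes
variant = email_oec(conversions=120, value_per_conversion=30.0,
                    unsubscribes=60, future_value_per_subscriber=25.0)

print("control:", control)
print("variant:", variant)
print("variant wins on conversions:", 120 > 100)
print("variant wins on the OEC:", variant > control)
```

With these numbers the variant looks like a winner if you only count conversions, and like a loser once the lost subscribers are priced in. That is exactly the trap a good OEC is meant to avoid.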

2. Most ideas fail

Of all the tests run at Microsoft, only one third turns out positive, one third is negative, and one third does absolutely nothing.

Keep that in mind. Don’t expect all of your tests to be successes.

3. Small changes can have a big impact

- The Bing server got 100 msec faster: +18 million dollars revenue annually

- Putting the first sentence of the description tag next to the title tag in the search results in Bing: +120 million dollars revenue annually

But these are rare gems among tens of thousands of experiments. Which brings us to lesson number 4:

4. Most progress is made inch by inch

Changes rarely have a big positive impact on key metrics. Winning is done by small continuous improvements, inch by inch.

Extra: keep Twyman’s law in mind. Any figure that looks interesting or different is usually wrong.

Examples:

- If you have a mandatory birthday field in your form and people think it’s unnecessary, you’ll see a lot of people being born on 11/11/11 or 1/1/1.

- If you have a mandatory profession field with an alphabetical dropdown, you’ll have a ton of astronauts.

- If you saw a doubling in traffic on your US website between 1 and 2 AM on Sunday, Nov 5th, it’s because daylight saving time ended and that hour occurred twice.
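A quick sanity check in the spirit of Twyman’s law: before you trust a form field, look for values that show up far too often. The sketch below is an illustration only. The `suspicious_values` helper, the 5% threshold and the sample data are assumptions, not something Ronny presented.

```python
from collections import Counter

def suspicious_values(values, max_share=0.05):
    """Return each value whose share of all entries is above max_share."""
    total = len(values)
    if total == 0:
        return {}
    counts = Counter(values)
    return {value: count / total for value, count in counts.items() if count / total > max_share}

# Made-up birthday field: 80 plausible, distinct dates plus two placeholder favourites
plausible = [f"{day}/{month}/19{70 + day % 20}" for day in range(1, 21) for month in range(1, 5)]
birthdays = plausible + ["11/11/11"] * 12 + ["1/1/1"] * 8

flagged = suspicious_values(birthdays)
for value, share in flagged.items():
    print(f"{value}: {share:.0%} of all entries, probably not real birthdays")
```

Real birthdays are spread fairly evenly over the calendar, so a single date that holds several percent of your entries says more about your form than about your users.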


5. Validate the experimentation system

Getting data is easy. Getting data you can trust is hard.

So run A/A tests and validate every test.
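In an A/A test both groups get exactly the same experience, so a healthy setup should report a “significant” difference only about as often as your significance level (5% here). The simulation below sketches that idea with a standard two-proportion z-test. It is a toy model, not Microsoft’s experimentation system, and the traffic numbers, conversion rate and function names are assumptions.

```python
import math
import random

def p_value(conv_a, n_a, conv_b, n_b):
    """Two-sided p-value of a two-proportion z-test (normal approximation)."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # no conversions at all (or only conversions): nothing to compare
    z = (conv_a / n_a - conv_b / n_b) / se
    return math.erfc(abs(z) / math.sqrt(2))

def aa_false_positive_rate(runs=500, users=1000, rate=0.10, alpha=0.05, seed=42):
    """Simulate A/A tests in which both groups share the same true conversion rate."""
    rng = random.Random(seed)
    false_positives = 0
    for _ in range(runs):
        a = sum(rng.random() < rate for _ in range(users))
        b = sum(rng.random() < rate for _ in range(users))
        if p_value(a, users, b, users) < alpha:
            false_positives += 1
    return false_positives / runs

print(f"Share of A/A tests flagged as significant: {aa_false_positive_rate():.1%}")
```

If your real A/A tests come out “significant” much more often than that, the problem is in the setup (assignment, tracking, bots), not in your ideas.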

More info? Download Ronny Kohavi’s slides here (pptx, 4.5 MB).

A big shout out to Tim Hengeveld who made the awesome sketch notes!
